Revamp Your Python Programming: 5 Essential Tips for Excelling in Pydantic-AI

How to Use Pydantic-AI: A Complete Guide

Introduction

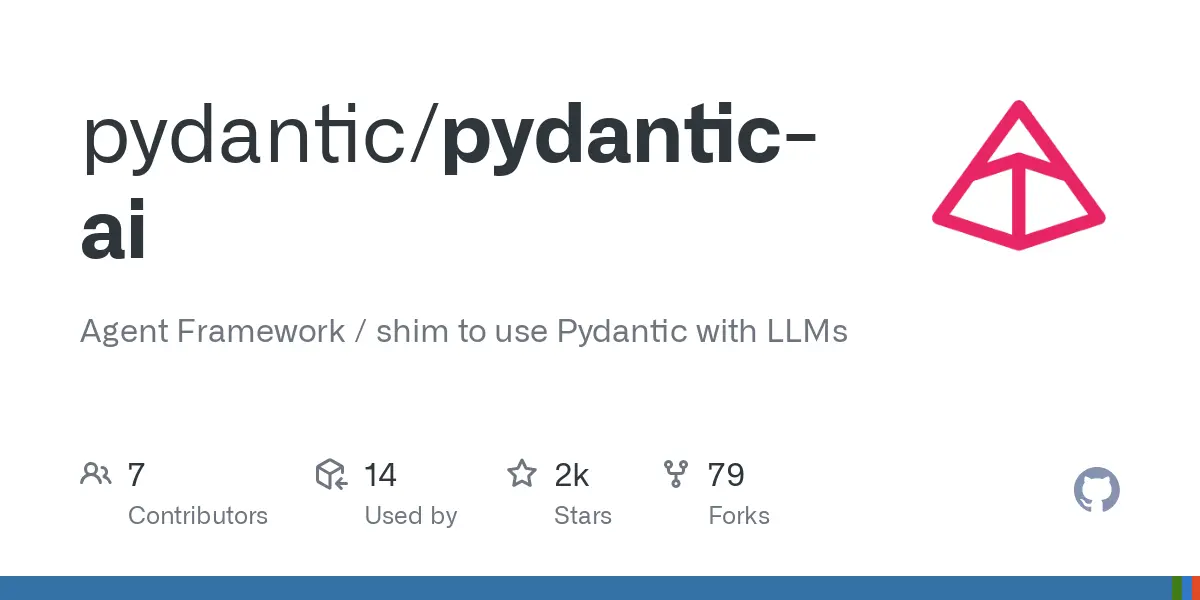

In modern software development, data validation is crucial, especially in AI-driven projects. This is where Pydantic-AI comes into play. Pydantic-AI, a framework from the team behind Pydantic, builds on the Pydantic library so that applications built around artificial intelligence (AI) models can work with typed, validated data. But what exactly is it, and why should you care? Let’s dive into the details of Pydantic-AI and explore how you can use it to streamline your projects.

- Introduction

- What is Pydantic-AI?

- Why use Pydantic-AI?

- Understanding the Basics of Pydantic-AI

- What is Pydantic?

- What does Pydantic-AI add to the mix?

- Getting Started with Pydantic-AI

- Installation and Setup

- Basic Requirements

- Creating a Simple Model with Pydantic-AI

- Defining Models in Pydantic-AI

- Using Pydantic for Data Validation

- Integrating Pydantic-AI with Machine Learning Models

- Why Pydantic-AI is Useful in AI Models

- Example of Using Pydantic-AI with an AI Model

- Working with Data Validation

- The Importance of Data Validation in AI

- Handling Invalid Data with Pydantic-AI

- Advanced Features of Pydantic-AI

- Type Hints and Data Models

- Nested Models and Complex Data Structures

- Performance Optimization with Pydantic-AI

- How Pydantic-AI Improves Performance

- Tips for Optimizing Model Performance

- Error Handling and Debugging with Pydantic-AI

- Common Errors in Pydantic-AI

- Debugging and Error Handling Techniques

- Testing with Pydantic-AI

- Writing Unit Tests for Pydantic Models

- How to Test Complex Data Structures

- Real-World Use Cases of Pydantic-AI

- Example 1: API Data Validation

- Example 2: Integrating with a Data Science Pipeline

- Security Considerations in Using Pydantic-AI

- Potential Security Risks

- How to Mitigate These Risks

- Best Practices for Using Pydantic-AI

- General Best Practices for Pydantic Models

- How to Avoid Common Mistakes

- Troubleshooting Common Issues in Pydantic-AI

- Issues with Performance

- Common Model Validation Problems

- Conclusion

- Summarizing the Benefits of Pydantic-AI

- Future of Pydantic-AI in AI Development

- FAQs

- What is the difference between Pydantic and Pydantic-AI?

- Can I use Pydantic-AI for non-AI projects?

- How does Pydantic-AI handle missing data?

- Is Pydantic-AI compatible with other machine learning frameworks?

- How can I learn more about Pydantic-AI?

What is Pydantic-AI?

Pydantic-AI (styled PydanticAI by its maintainers) is a Python framework from the team behind Pydantic, a popular library for data validation and settings management. It is aimed at applications built on AI models, large language models in particular, and it relies on Pydantic models to describe and validate the structured data that flows into and out of those models.

Why Use Pydantic-AI?

If you’re building machine learning models, data handling can quickly become a mess without proper validation. Pydantic-AI ensures that your data is clean, valid, and structured correctly before it’s fed into an AI system, helping to prevent issues down the road. It saves you time, reduces errors, and enhances the overall performance of your AI model.

Understanding the Basics of Pydantic-AI

What is Pydantic?

Pydantic is a data validation and settings management library for Python that uses Python type annotations to define and enforce data types. Pydantic ensures that the data provided to your Python models is consistent and meets the expected format before being processed. It is commonly used in web development, APIs, and anywhere structured data is required.
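As a minimal sketch of what this looks like in practice (assuming Pydantic v2; the `User` model and its fields are made up for illustration), the type annotations on a `BaseModel` subclass drive both the conversion and the rejection of input values:

```python
from pydantic import BaseModel, ValidationError


class User(BaseModel):
    # Hypothetical fields, chosen only for illustration
    name: str
    age: int


# A numeric string is converted to int in Pydantic's default (lax) mode
user = User(name="Ada", age="36")
print(user.age, type(user.age).__name__)

# A value that cannot be converted is rejected with a ValidationError
try:
    User(name="Ada", age="thirty-six")
    rejected = False
except ValidationError:
    rejected = True
print(rejected)
```

The first call succeeds because `"36"` can be read as an integer; the second fails because `"thirty-six"` cannot.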

What Does Pydantic-AI Add to the Mix?

Pydantic-AI is designed to bridge the gap between data validation and AI models. It uses Pydantic models to define the structured data an AI model should receive or return, and it validates that data against the schema so malformed values are caught at the boundary. This lets you focus on your application's functionality rather than on hand-written data checks.

Getting Started with Pydantic-AI

Installation and Setup

To get started with Pydantic-AI, you’ll first need to install it. It is published on PyPI, so you can install it via pip:

```bash
pip install pydantic-ai
```

Use a recent Python 3 release. If your project also needs machine learning libraries such as TensorFlow or PyTorch, install them separately.

Basic Requirements

Pydantic is installed automatically as a dependency of Pydantic-AI. The machine learning example later in this guide also uses scikit-learn, and you can pair validated data with TensorFlow, PyTorch, or any other machine learning library your project requires; install those separately.

Creating a Simple Model with Pydantic-AI

Defining Models in Pydantic-AI

Models that encapsulate the structure of your data are defined as subclasses of Pydantic's BaseModel; these are the schemas Pydantic-AI works with. Here’s a simple example:

```python
from pydantic import BaseModel


class MyModel(BaseModel):
    name: str
    age: int
    is_active: bool
```

In this example, we define a model where name is a string, age is an integer, and is_active is a boolean. Pydantic validates that the data matches these types before you use it in your AI models.

Using Pydantic for Data Validation

Data validation is the core feature here. If you try to create an instance of MyModel with incorrect data, a ValidationError is raised:

```python
model = MyModel(name="John", age="twenty", is_active=True)  # Raises ValidationError
```

This helps you catch errors early in the development process.

Integrating Pydantic-AI with Machine Learning Models

Why Pydantic-AI is Useful in AI Models

When dealing with AI, data is everything. If the data is invalid, the results can be catastrophic. By using Pydantic-AI for data validation, you ensure that your models receive clean, consistent data. This improves the reliability and accuracy of your AI models.

Example of Using Pydantic-AI with an AI Model

Here’s an example where we validate records before feeding them into a simple machine learning model:

```python
from pydantic import BaseModel
from sklearn.linear_model import LogisticRegression


class MyModel(BaseModel):
    name: str
    age: int
    is_active: bool


# Validate each raw record before it reaches the estimator
records = [
    MyModel(name="Alice", age=30, is_active=True),
    MyModel(name="Bob", age=52, is_active=False),
]

X = [[r.age] for r in records]                  # Feature: age
y = [1 if r.is_active else 0 for r in records]  # Target: 1 for active, 0 for inactive

# Train the machine learning model; fit() needs at least two classes in y
model = LogisticRegression()
model.fit(X, y)
```

In this case, every record is validated before the model is trained. Two records are used because a classifier cannot be fitted on a single class.

Working with Data Validation

The Importance of Data Validation in AI

In AI development, the importance of data validation cannot be overstated. Models trained with incorrect or incomplete data may fail to perform as expected. By using Pydantic-AI for data validation, you reduce the chances of errors and increase the reliability of your AI models.

Handling Invalid Data with Pydantic-AI

Pydantic-AI provides built-in mechanisms to handle invalid data gracefully. When invalid data is encountered, it raises clear error messages, making it easier for you to identify and fix issues before they affect your AI model.

Advanced Features of Pydantic-AI

Type Hints and Data Models

Pydantic-AI takes full advantage of Python’s type hints to define data models, ensuring that the data types are consistent throughout your application. This makes it easier to work with large datasets and helps avoid common type-related bugs.
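To make this concrete, here is a small sketch of type hints combined with field constraints. It assumes plain Pydantic; the `TrainingConfig` model, its field names, and its bounds are hypothetical and chosen only for illustration.

```python
from typing import Literal

from pydantic import BaseModel, Field, ValidationError


class TrainingConfig(BaseModel):
    # Hypothetical hyperparameters; names and bounds are illustrative
    learning_rate: float = Field(gt=0, le=1)
    optimizer: Literal["sgd", "adam"] = "adam"
    epochs: int = Field(default=10, ge=1)


config = TrainingConfig(learning_rate=0.01)
print(config.optimizer, config.epochs)


def is_valid(**kwargs) -> bool:
    """Return True if kwargs form a valid TrainingConfig."""
    try:
        TrainingConfig(**kwargs)
        return True
    except ValidationError:
        return False


print(is_valid(learning_rate=0.0))                        # violates gt=0
print(is_valid(learning_rate=0.1, optimizer="rmsprop"))   # not in the Literal
```

Encoding the allowed ranges in the type hints means a mistyped hyperparameter fails at load time rather than partway through a training run.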

Nested Models and Complex Data Structures

Pydantic-AI also supports nested models, which allows you to validate complex data structures, such as lists or dictionaries. This is especially useful when working with more intricate machine learning pipelines or multi-stage models.
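The following sketch shows one way nested models, lists, and dictionaries can be combined. It assumes plain Pydantic; `Sample` and `Dataset` are hypothetical names used only for this example.

```python
from typing import Dict, List

from pydantic import BaseModel, ValidationError


class Sample(BaseModel):
    features: List[float]
    label: int


class Dataset(BaseModel):
    name: str
    metadata: Dict[str, str]
    samples: List[Sample]


raw = {
    "name": "toy",
    "metadata": {"source": "manual"},
    "samples": [
        {"features": [0.1, 0.2], "label": 0},
        {"features": [0.3, 0.4], "label": "cat"},  # invalid label
    ],
}

try:
    Dataset(**raw)
    error_locations = []
except ValidationError as exc:
    # Each error records the path to the offending value
    error_locations = [err["loc"] for err in exc.errors()]

print(error_locations)
```

The reported location is the path `samples`, index `1`, field `label`, which is what makes errors in deeply nested input easy to trace.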

Performance Optimization with Pydantic-AI

How Pydantic-AI Improves Performance

One of the standout features of this stack is efficient validation. Pydantic v2 runs its core validation logic in compiled Rust code (the pydantic-core package), which keeps the overhead of checking data low and lets it sit in front of your AI models without becoming the bottleneck.

Tips for Optimizing Model Performance

To get the most out of Pydantic-AI, consider optimizing your data validation step. Validate records in batches rather than one call at a time, and reuse validator objects for repetitive validation tasks instead of rebuilding them. This can noticeably improve performance, especially when complex AI models require processing large amounts of data.
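The text above does not name a specific API for batching or caching. One possible reading, assuming Pydantic v2, is to build a `TypeAdapter` for a list of models once and reuse it for every batch. `Reading` and `validate_batch` are hypothetical names, and this is a sketch rather than a Pydantic-AI-specific feature.

```python
from typing import List

from pydantic import BaseModel, TypeAdapter


class Reading(BaseModel):
    sensor_id: str
    value: float


# Build the adapter once at import time and reuse it for every batch
READINGS_ADAPTER = TypeAdapter(List[Reading])


def validate_batch(rows: list) -> List[Reading]:
    """Validate a whole list of raw rows in a single call."""
    return READINGS_ADAPTER.validate_python(rows)


batch = validate_batch(
    [
        {"sensor_id": "a1", "value": "20.5"},
        {"sensor_id": "b2", "value": 19},
    ]
)
print([r.value for r in batch])
```

Constructing the adapter at module level avoids rebuilding the validator on every call, which is the "caching" half of the advice.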

Error Handling and Debugging with Pydantic-AI

Common Errors in Pydantic-AI

While Pydantic-AI is robust, you may run into some common errors when using it for data validation. The most typical errors include:

- Validation Errors: If the data doesn’t match the expected type, a ValidationError will be raised. This ensures that only correctly structured data enters your model.

- Type Mismatch: If a field expects a string but receives an integer, it will trigger an error.

- Missing Required Fields: Pydantic models require every field that has no default value. If any are missing, a ValidationError is raised.

Debugging and Error Handling Techniques

Debugging with Pydantic-AI is relatively straightforward. The library provides clear and descriptive error messages that help pinpoint the issue. Here’s how you can handle and debug errors effectively:

- Use try-except blocks around model instantiations to catch validation errors:

```python
from pydantic import ValidationError

try:
    model = MyModel(name="Bob", age="twenty", is_active=True)
except ValidationError as e:
    print(f"Validation error: {e}")
```

- Utilize the errors() method of the caught exception to get a more detailed breakdown of what went wrong:

```python
try:
    model = MyModel(name="Bob", age="twenty", is_active=True)
except ValidationError as e:
    print(e.errors())
```

This method returns a list of dictionaries, one per error, each giving the field location, the error type, and a message, which helps you quickly identify the issue and fix it.

Testing with Pydantic-AI

Writing Unit Tests for Pydantic Models

Testing is crucial to ensure that your Pydantic-AI models work as expected. For unit testing, Python’s built-in unittest framework can be a great tool. Here’s an example:

```python
import unittest

from pydantic import BaseModel, ValidationError


class MyModel(BaseModel):
    name: str
    age: int
    is_active: bool


class TestMyModel(unittest.TestCase):
    def test_valid_data(self):
        model = MyModel(name="John", age=25, is_active=True)
        self.assertEqual(model.name, "John")
        self.assertEqual(model.age, 25)

    def test_invalid_data(self):
        # A non-numeric string cannot be converted to int
        with self.assertRaises(ValidationError):
            MyModel(name="John", age="not a number", is_active=True)
```

How to Test Complex Data Structures

Testing complex data structures in Pydantic-AI involves defining nested models and ensuring that each level of the model is validated correctly. For example:

```python
from pydantic import BaseModel


class AddressModel(BaseModel):
    street: str
    city: str


class UserModel(BaseModel):
    name: str
    address: AddressModel


def test_user_model():
    # The nested dict is validated against AddressModel
    user_data = {"name": "Alice", "address": {"street": "123 Main St", "city": "New York"}}
    user = UserModel(**user_data)
    assert user.name == "Alice"
    assert user.address.city == "New York"
```

Unit tests like this ensure that nested models are validated correctly at each level.

Real-World Use Cases of Pydantic-AI

Example 1: API Data Validation

One of the most common use cases for Pydantic-AI is validating data coming from external APIs. For instance, if you’re building an API that processes incoming user data, Pydantic-AI ensures that the incoming data is valid before your system processes it. This could include validating fields like email, phone number, and address.
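As a rough sketch of this use case, assuming Pydantic v2: the `ContactPayload` model, the `parse_request` helper, and the field limits below are hypothetical, and the email check is deliberately simplistic rather than a complete validator.

```python
from pydantic import BaseModel, Field, ValidationError, field_validator


class ContactPayload(BaseModel):
    # Hypothetical request body for illustration
    email: str
    phone: str = Field(min_length=7, max_length=15)
    address: str = Field(min_length=1)

    @field_validator("email")
    @classmethod
    def email_must_look_plausible(cls, value: str) -> str:
        # Deliberately simple check; not a full email-address validator
        local, sep, domain = value.partition("@")
        if not (local and sep and "." in domain):
            raise ValueError("not a plausible email address")
        return value.lower()


def parse_request(body: str):
    """Return (payload, None) on success or (None, errors) on failure."""
    try:
        return ContactPayload.model_validate_json(body), None
    except ValidationError as exc:
        return None, exc.errors()


ok, _ = parse_request(
    '{"email": "Ada@Example.com", "phone": "5551234567", "address": "1 Main St"}'
)
bad, errors = parse_request('{"email": "nope", "phone": "1", "address": ""}')
print(ok.email)
print(sorted(err["loc"][0] for err in errors))
```

Returning the error list instead of raising lets an API handler turn it directly into a 4xx response body.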

Example 2: Integrating with a Data Science Pipeline

In a data science pipeline, you often need to validate the quality of incoming data before it’s used for model training. With Pydantic-AI, you can ensure that your data meets the expected schema and format before it’s passed to the model. This is particularly helpful in scenarios where data is sourced from multiple databases or data streams.
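A pipeline step along these lines might look like the sketch below. It assumes plain Pydantic and an in-memory CSV; `HousingRow`, `load_rows`, and the sample data are invented for illustration.

```python
import csv
import io
from typing import List, Tuple

from pydantic import BaseModel, Field, ValidationError


class HousingRow(BaseModel):
    # Hypothetical schema for one training example
    area_sqm: float = Field(gt=0)
    rooms: int = Field(ge=1)
    price: float = Field(gt=0)


def load_rows(text: str) -> Tuple[List[HousingRow], List[dict]]:
    """Split CSV rows into validated rows and rejected raw rows."""
    valid, rejected = [], []
    for raw in csv.DictReader(io.StringIO(text)):
        try:
            valid.append(HousingRow(**raw))
        except ValidationError:
            rejected.append(raw)
    return valid, rejected


CSV_TEXT = """area_sqm,rooms,price
54.0,2,210000
-10,3,150000
80.5,three,320000
"""

rows, bad_rows = load_rows(CSV_TEXT)
X = [[r.area_sqm, r.rooms] for r in rows]  # feature matrix
y = [r.price for r in rows]                # target vector
print(len(rows), len(bad_rows))
```

Keeping the rejected rows, rather than silently dropping them, makes it possible to report how much of each source failed the schema.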

Security Considerations in Using Pydantic-AI

Potential Security Risks

While Pydantic-AI is a powerful tool, validation alone does not make data safe. Validation checks structure and types, not intent: a string that passes type validation can still carry a malicious payload. Treat data from untrusted sources as untrusted even after it has been validated, and handle it accordingly in downstream code.

How to Mitigate These Risks

To mitigate these risks, always use secure coding practices:

- Validate inputs thoroughly to prevent injection attacks.

- Use authentication and authorization to ensure that sensitive data is protected.

- Ensure that only valid, well-structured data enters your model by utilizing Pydantic-AI’s strict validation rules.
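The list above does not specify what "strict validation rules" means in code. One reading, assuming Pydantic v2, is to forbid unknown fields and turn off type coercion through the model configuration. `StrictEvent` and `accepts` are hypothetical names, and this tightens the accepted shape of the data without replacing the other measures listed.

```python
from pydantic import BaseModel, ConfigDict, ValidationError


class StrictEvent(BaseModel):
    # Reject unknown keys and disable type coercion
    model_config = ConfigDict(extra="forbid", strict=True)

    user_id: int
    action: str


def accepts(payload: dict) -> bool:
    """Return True if the payload passes strict validation."""
    try:
        StrictEvent(**payload)
        return True
    except ValidationError:
        return False


print(accepts({"user_id": 7, "action": "login"}))
print(accepts({"user_id": "7", "action": "login"}))  # string where int is required
print(accepts({"user_id": 7, "action": "login", "is_admin": True}))  # unexpected key
```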

Best Practices for Using Pydantic-AI

General Best Practices for Pydantic Models

- Define Clear Data Structures: Be explicit about the data types for each field to avoid ambiguity.

- Use Default Values: For optional fields, use default values to make your models more flexible and less prone to validation errors.

- Leverage Aliases: Use alias for fields with different names to enhance compatibility with external APIs or databases.
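The practices above can be sketched together in one small model. This assumes Pydantic v2 (for `model_dump`); `Customer` and its fields are hypothetical.

```python
from typing import Optional

from pydantic import BaseModel, Field


class Customer(BaseModel):
    # External systems send "customerName"; Python code uses customer_name
    customer_name: str = Field(alias="customerName")
    # Optional fields get explicit defaults
    nickname: Optional[str] = None
    newsletter: bool = False


customer = Customer(**{"customerName": "Ada Lovelace"})
print(customer.customer_name, customer.nickname, customer.newsletter)

# Serialize back using the external field names
exported = customer.model_dump(by_alias=True)
print(exported["customerName"])
```

The alias keeps the external naming convention at the boundary while the rest of the code uses idiomatic Python attribute names.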

How to Avoid Common Mistakes

- Don’t Skip Validation: Always ensure that data is validated before it enters your system.

- Avoid Complex Models: Try to keep your models simple and avoid unnecessary complexity, as this can reduce performance and readability.

- Handle Errors Gracefully: Always anticipate and handle potential validation errors using appropriate error handling strategies.

Troubleshooting Common Issues in Pydantic-AI

Issues with Performance

If you notice performance degradation, it could be due to inefficient validation steps, especially with large datasets. Consider optimizing your validation logic by:

- Using batch processing to handle multiple data entries at once.

- Leveraging cached validation where possible to reduce redundant computations.

Common Model Validation Problems

One common issue developers face is having inconsistent data types that lead to frequent validation errors. To avoid this, ensure that the data you are validating is consistently formatted before applying Pydantic-AI validation rules.
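One way to apply consistent formatting is to normalize raw values inside the model itself, just before type validation runs. The sketch below assumes Pydantic v2's `field_validator` with `mode="before"`; `SurveyAnswer` and the placeholder strings it treats as missing are illustrative choices.

```python
from typing import Optional

from pydantic import BaseModel, field_validator


class SurveyAnswer(BaseModel):
    respondent: str
    age: Optional[int] = None

    @field_validator("respondent", mode="before")
    @classmethod
    def strip_whitespace(cls, value):
        # Runs before type validation
        return value.strip() if isinstance(value, str) else value

    @field_validator("age", mode="before")
    @classmethod
    def blank_to_none(cls, value):
        # Trim strings and treat common placeholders as missing
        if isinstance(value, str):
            value = value.strip()
            if value.lower() in {"", "n/a", "na"}:
                return None
        return value


answers = [
    SurveyAnswer(respondent="  ada ", age=" 36 "),
    SurveyAnswer(respondent="bob", age="N/A"),
]
print([(a.respondent, a.age) for a in answers])
```

Putting the cleanup in the model keeps every data source subject to the same normalization rules.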

Conclusion

In summary, Pydantic-AI is an invaluable tool for managing data validation in AI-driven projects. It helps streamline your workflow by ensuring that the data fed into your machine learning models is clean, accurate, and properly structured. With its integration into machine learning pipelines, Pydantic-AI not only enhances the robustness of your models but also improves the overall quality and performance of your system.

As AI and machine learning technologies continue to evolve, using tools like Pydantic-AI can save you time, reduce errors, and help you build more efficient and reliable models.

FAQ

What is the difference between Pydantic and Pydantic-AI?

Pydantic is a general-purpose data validation library, while Pydantic-AI is a framework built on top of Pydantic for AI applications. It uses Pydantic models to define and validate the structured data exchanged with AI models.

Can I use Pydantic-AI for non-AI projects?

Pydantic-AI is aimed at AI workflows, but the Pydantic models it relies on work for general data validation tasks. For non-AI projects, Pydantic alone is usually the more suitable choice.

How does Pydantic-AI handle missing data?

Optional fields fall back to their default values, and a validation error reports any required field that is missing. You can also use custom validators to handle missing or incomplete data more effectively.

Is Pydantic-AI compatible with other machine learning frameworks?

Yes. Validated models are ordinary Python objects, so their values can be passed to popular machine learning frameworks like TensorFlow, PyTorch, and scikit-learn as part of your existing AI workflow.

How can I learn more about Pydantic-AI?

You can refer to the official Pydantic-AI documentation for in-depth guides, examples, and tutorials to deepen your understanding of how to integrate it into your AI projects.